Understanding the development trends of large language models (LLMs) is essential for navigating the future of AI. In this article, we’ll delve into the latest trends in LLM development for 2025, covering advancements in efficiency, multimodal capabilities, domain-specific models, autonomous agents, ethical concerns, synthetic data, reinforcement learning, open-source contributions, prompt engineering, and enterprise utilization. Keep reading to stay updated on how these trends could shape the future of large language models and the next wave of AI innovation.

Key Takeaways

– The evolution of large language models (LLMs) reflects a shift from simple tools to sophisticated systems that can proactively meet business needs and enhance productivity.

– Efficiency and scalability are essential for the continued growth of LLMs, with advancements in techniques like quantization and Low-Rank Adaptation supporting the ‘Green AI’ movement for sustainable AI practices.

– The rise of domain-specific LLMs and multimodal capabilities is transforming industries by enabling customized solutions and enhancing user interactions across various media formats.

Evolution of Large Language Models

The origins of large language models can be traced back to several key milestones:

– Mid-20th century: Pioneers like Alan Turing laid the groundwork for artificial intelligence.

– 1950s: Early language processing initiatives focused on machine translation and basic natural language processing tasks.

– Initial efforts were primarily rule-based.

– 1990s: Introduction of statistical language models marked a significant shift.

– Statistical models allowed for more adaptable and accurate language processing, paving the way for more sophisticated systems.

Neural network architectures that matured in the 1990s, such as Convolutional Neural Networks (CNNs) and recurrent networks like the LSTM (1997), were later applied to certain natural language processing tasks, further advancing the field. However, it was the introduction of the Transformer architecture in 2017 that truly revolutionized large language models. By replacing recurrence with self-attention, the Transformer allowed all tokens in a sequence to be processed in parallel and handled long-range dependencies better, which significantly boosted training efficiency and model performance. OpenAI’s GPT-1, released in 2018, was a landmark achievement that popularized generative pre-training for language models.

The evolution of large language models (LLMs) includes:

– GPT-1: The initial model that started the progression.

– Google’s BERT (2018): Set new benchmarks in natural language understanding through its bidirectional training approach.

– GPT-3 (2020): A groundbreaking model with 175 billion parameters, showcasing advanced capabilities in various language tasks.

– GPT-4 (2023): More capable than its predecessors on complex tasks and able to accept image inputs as well as text. OpenAI has not disclosed its parameter count.

These advancements reflect the transition of LLMs from reactive responders to proactive business agents capable of performing a wide array of functions.

The journey of LLMs from their early days to the present highlights a fundamental transformation. They have evolved from tools that could perform simple tasks to sophisticated systems that can generate human-like responses and even anticipate business needs. This evolution signifies a shift in the role of AI from being a mere gadget to becoming a reliable partner in various industries. As we look to the future, the development of LLMs will continue to push the boundaries of what artificial intelligence can achieve.

Efficiency and Scalability in LLMs

As the capabilities of large language models continue to expand, so does the need for these models to be more energy-efficient and scalable. This is not only a matter of performance but also of sustainability. The ‘Green AI’ movement highlights the need for smaller, efficient models that reduce energy consumption and support broader environmental goals. Techniques such as quantization, which reduces the precision of model weights (for example, from 16- or 32-bit floating point to 8-bit or 4-bit integers), play a crucial role in cutting down memory and computational needs.
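
To make the idea of quantization concrete, here is a minimal sketch in plain Python. It assumes a symmetric, per-tensor int8 scheme, which is only one of several schemes used in practice; the function names and sample weights are illustrative and do not come from any particular library.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to the int8 range [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 if all weights are zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 values back to approximate floats."""
    return [v * scale for v in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.12, -0.5, 0.33, 1.27, -1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print("largest round-trip error:", max_error)
```

Each weight now needs one byte instead of four, at the cost of a rounding error of at most half the scale per weight.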

Another significant advancement is Low-Rank Adaptation (LoRA), which allows fine-tuning of large models with fewer computational resources by freezing the original weights and learning each weight update as the product of two small low-rank matrices. Flash Attention is another technique that optimizes attention computation in transformers: it computes exact attention in tiles that fit in fast on-chip GPU memory, reducing memory usage and increasing speed. Efficient caching can also significantly improve response times by reusing earlier outputs instead of recomputing them, enhancing the overall user experience.
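
The decomposition behind LoRA can be sketched in a few lines. This is an illustrative example in plain Python rather than a training implementation: the matrices, the helper names, and the `alpha / r` scaling follow the common description of LoRA, and the values are made up.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(W, B, A, alpha, r):
    """Return W + (alpha / r) * (B @ A); the frozen W itself is not modified."""
    delta = matmul(B, A)
    s = alpha / r
    return [[w + s * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


def trainable_params(d_out, d_in, r):
    """Compare parameter counts: full update vs. the low-rank factors B and A."""
    return d_out * d_in, r * (d_out + d_in)


W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # frozen 2x3 weight matrix
B = [[0.5], [-0.5]]                     # trainable 2x1 factor
A = [[1.0, 2.0, 3.0]]                   # trainable 1x3 factor
W_eff = lora_effective_weight(W, B, A, alpha=2, r=1)
print(W_eff)

full, lora = trainable_params(4096, 4096, 8)
print(full, lora)  # 16777216 vs 65536 trainable values
```

For a 4096 by 4096 layer with rank 8, the low-rank factors hold 256 times fewer trainable values than a full update, which is where the savings come from.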

Model parallelism, which distributes models across multiple devices, enables the functioning of larger models without overwhelming individual hardware units. Additionally, effective cloud-based services allow organizations to scale resources dynamically without incurring high initial hardware costs.

These advancements are crucial as the global LLM market continues to grow, driven by the increasing demand for specialized LLMs and the need for efficient solutions in various industries.

Multimodal Capabilities

The integration of multimodal capabilities into AI systems represents a significant leap forward in the functionality and versatility of large language models. These advancements enable LLMs to process and generate not just text, but also images, audio, and video, thereby broadening their applicability. This evolution towards multimodal AI is set to enhance accessibility for users with disabilities by allowing various input and output formats.

Incorporating multimodal features into LLMs can significantly improve user interaction by combining various data forms, such as audio and visual inputs. This capability is particularly beneficial in customer engagement strategies, where personalized and context-aware outputs can lead to better user experiences.

The rise of multimodal AI is also expected to influence content creation by enabling richer multimedia experiences, such as generating video summaries based on textual analysis. Future LLMs will focus on offering coherent responses by understanding context from multiple modalities, improving their usability in diverse applications.

By synthesizing textual and visual data, multimodal models open new opportunities for various applications, from virtual assistants to complex data analysis. These advancements highlight the ongoing transformation of AI technology, making it more adaptable and responsive to a wide range of user needs.

Domain-Specific LLMs

The rise of domain-specific large language models is a testament to the increasing need for specialized solutions tailored to specific industries. Customizing a language model makes user interactions more intuitive and dependable. Companies are now offering customizable models that can be fine-tuned to better suit the language and needs of specific industries, such as finance, healthcare, and law.

Projections and developments reported for 2025 include:

– 50% of digital tasks in financial institutions will be automated using specialized language models.

– This demand is driven by the necessity for improved compliance, accuracy, and efficiency in specialized tasks.

– Significant companies like Google, Microsoft, and Meta are developing proprietary, customized LLMs to better serve specific sectors.

– Seventy percent of firms are investing in generative AI research, indicating a strong interest in domain-specific models.

The flexibility of open-source models allows businesses to fine-tune them with domain-specific data, enhancing their relevance and accuracy. For instance, businesses can enhance their internal search capabilities using LLMs and sparse expert models, so that employees can search with natural language queries.

Additionally, LLMs can assist developers by generating code snippets or SQL queries, integrating with tools like GitHub and Azure DevOps. These advancements demonstrate how domain-specific language models are gaining traction in software development, enabling businesses to fine-tune AI for specific applications and improving accuracy and compliance. This wave of specialization is accelerating LLM adoption in enterprises across regulated industries.

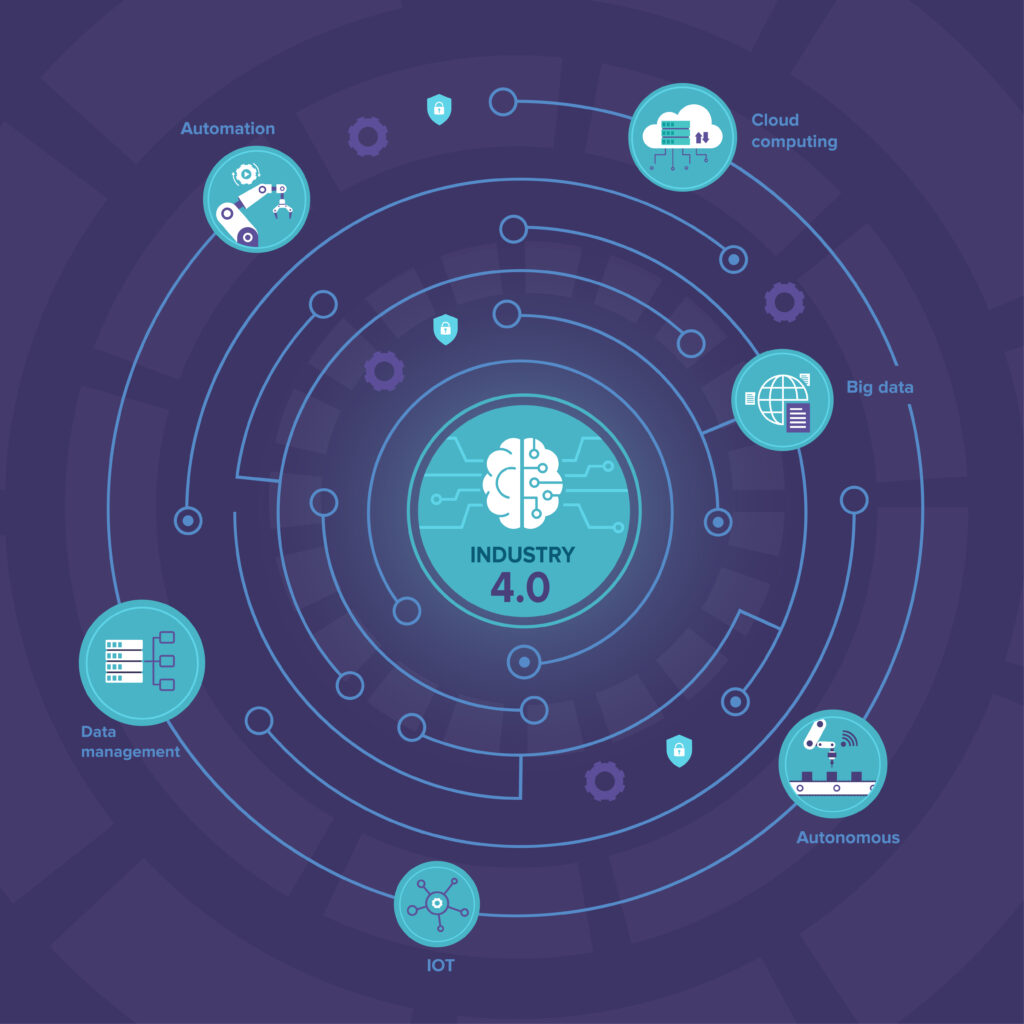

Autonomous Agents and AI Systems

In 2025, the emergence of autonomous agents powered by LLMs is expected to fundamentally change work dynamics, enhancing efficiency and automation across various sectors. These AI systems can perform tasks such as making purchases, scheduling meetings, and handling customer support efficiently. The development of AI agents involves creating robust mechanisms for risk management, including rollback actions and audit logs.

Key pillars for designing and deploying LLM-driven autonomous agents include:

– Planning

– Memory

– Tools

– Control Flow

Integrating these elements enables AI agents to perform complex tasks autonomously, significantly boosting productivity. The integration of AI agents into enterprise environments requires organizations to be prepared with appropriate APIs and data structures. Companies that adopt agentic systems may gain a competitive edge through faster innovation.

By 2028, 33% of enterprise applications are expected to include autonomous agents. Companies such as Relevance AI are using autonomous agents to transform both back-office functions and customer interactions. The Model Context Protocol (MCP), an open standard introduced by Anthropic in late 2024, gives agents a common way to connect to external tools and data sources, which helps when orchestrating complex agent behaviors and automating tasks.

As we look to the future, these advancements underline the potential of AI agents to augment human roles rather than replace them, fostering collaboration and enhancing productivity. This agentic shift represents one of the most dynamic emerging LLM use cases.
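
The four pillars, together with the audit logs mentioned earlier, can be sketched as a small Python class. This is a toy illustration under stated assumptions: the plan is hard-coded where a real agent would ask an LLM, and the class name, tool names, and log format are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    tools: dict                                    # Tools: name -> callable
    memory: list = field(default_factory=list)     # Memory: results of earlier steps
    audit_log: list = field(default_factory=list)  # Audit trail for later review

    def plan(self, goal):
        """Planning: a hard-coded stand-in for an LLM-generated plan."""
        return [("lookup_calendar", goal), ("schedule_meeting", goal)]

    def run(self, goal, max_steps=5):
        """Control flow: execute planned steps, bounded by a step limit."""
        for step, (tool_name, arg) in enumerate(self.plan(goal)):
            if step >= max_steps:
                break
            if tool_name not in self.tools:
                # Refuse unknown tools and record the refusal instead of failing.
                self.audit_log.append((tool_name, "rejected: unknown tool"))
                continue
            result = self.tools[tool_name](arg)
            self.memory.append(result)
            self.audit_log.append((tool_name, "ok"))
        return self.memory


# Only one of the two planned tools is registered, to show the rejection path.
agent = Agent(tools={"lookup_calendar": lambda goal: f"free slot found for {goal}"})
print(agent.run("team sync"))
print(agent.audit_log)
```

Keeping the tool registry explicit and logging every attempted call is one simple way to support the risk-management mechanisms described above, since each action the agent takes or is denied leaves a record.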

Ethical Considerations and Bias Mitigation

Bias in AI systems can lead to skewed outputs that do not align with ethical standards, raising concerns about trust and fairness. Techniques like MinDiff focus on balancing errors across demographic groups, while Counterfactual Logit Pairing ensures consistent predictions despite changes in sensitive attributes. These methods are crucial for maintaining fairness and accountability in AI applications.
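
The sketch below shows, in plain Python, the kind of quantities these methods target. It is not an implementation of MinDiff or Counterfactual Logit Pairing, which are training-time techniques. It only measures a false-positive-rate gap between groups and checks whether a prediction changes when a sensitive term is swapped. The data, the group labels, and the toy classifier are made up for illustration.

```python
def false_positive_rate(labels, preds):
    """Share of actual negatives (label 0) that were predicted positive (1)."""
    negatives = [p for y, p in zip(labels, preds) if y == 0]
    return sum(negatives) / len(negatives) if negatives else 0.0


def fpr_gap(labels, preds, groups):
    """Per-group false positive rates and the spread between best and worst group."""
    rates = {}
    for g in set(groups):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        rates[g] = false_positive_rate([labels[i] for i in idx], [preds[i] for i in idx])
    return rates, max(rates.values()) - min(rates.values())


def counterfactual_consistent(predict, text, swaps):
    """True if swapping sensitive words leaves the prediction unchanged."""
    swapped = " ".join(swaps.get(word, word) for word in text.split())
    return predict(text) == predict(swapped)


# Hypothetical evaluation data for two demographic groups "a" and "b".
labels = [0, 0, 0, 0, 1, 1, 0, 0]
preds = [1, 0, 0, 0, 1, 1, 1, 1]
groups = ["a", "a", "a", "a", "a", "b", "b", "b"]
rates, gap = fpr_gap(labels, preds, groups)
print(rates, gap)

# Toy classifier: flags any text that mentions a refund.
toy_predict = lambda text: int("refund" in text)
print(counterfactual_consistent(toy_predict, "he asked for a refund", {"he": "she"}))
```

A large gap between groups, or a prediction that flips under a swap, is the signal that a remediation technique would then try to reduce during training.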

Ethical AI practices include guidelines promoting fairness and accountability, which are essential for regulatory frameworks in AI applications. Transparency is equally vital for building trust in AI systems, because it helps clarify how decisions are made and supports responsible AI development.

As LLMs continue to evolve, addressing these ethical considerations will be paramount in ensuring that AI systems are both effective and fair, with human oversight.

Synthetic Data for Training

Synthetic data is information generated to mimic real datasets, and it is increasingly used for training large language models. Key aspects include:

– Prompt-based generation and retrieval-augmented pipelines are key methods of synthetic data generation.

– Synthetic data can lead to cost savings.

– It enhances the diversity of training examples in model development.

However, challenges associated with synthetic data include potential factual inaccuracies and biased outputs in the generated content. These issues can be mitigated through filtering outputs and utilizing reinforcement learning.
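
A minimal sketch of prompt-based generation followed by output filtering might look like this. The template, the filtering rules, and the sample outputs are assumptions made for illustration. A real pipeline would send the prompts to a model and would likely add factuality and bias checks on top of these basic filters.

```python
def build_prompts(topics, template):
    """Prompt-based generation: one prompt per topic from a shared template."""
    return [template.format(topic=t) for t in topics]


def filter_examples(examples, min_words=4, banned=("lorem",)):
    """Drop duplicates, very short outputs, and outputs containing banned terms."""
    seen, kept = set(), []
    for text in examples:
        # Normalize case and whitespace so near-identical outputs compare equal.
        key = " ".join(text.lower().split())
        if key in seen:
            continue
        if len(key.split()) < min_words:
            continue
        if any(term in key for term in banned):
            continue
        seen.add(key)
        kept.append(text)
    return kept


prompts = build_prompts(["geography", "history"], "Write one quiz question about {topic}.")
print(prompts)

# Stand-ins for model outputs; a real pipeline would generate these from the prompts.
raw_outputs = [
    "What is the capital of France?",
    "what is the  capital of france?",
    "Too short",
    "Lorem ipsum dolor sit amet",
]
print(filter_examples(raw_outputs))
```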

Synthetic data can be generated for both natural language and code domains, enhancing the training datasets for various applications. As the demand for diverse and extensive training data continues to grow, synthetic data will play a crucial role in the development of more robust and versatile LLMs.

Reinforcement Learning and Human Feedback

Reinforcement learning from human feedback (RLHF) enhances language models by allowing them to learn from ongoing human input rather than only a fixed, hand-written reward function. RLHF typically involves three steps: collecting feedback, training a reward model on that feedback, and optimizing the model’s policy against the reward model so that it aligns with user preferences. Human feedback can take various forms, including ranking outputs and providing corrections, each with distinct advantages and challenges.
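
The first two steps can be illustrated with a short Python sketch. It assumes feedback arrives as best-first rankings and that the reward model is trained with the pairwise logistic loss commonly used for this purpose. The reward scores are placeholder numbers rather than outputs of a real model, and the policy optimization step is omitted.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranking):
    """Turn a best-first ranking into (preferred, rejected) pairs."""
    return list(combinations(ranking, 2))


def pairwise_loss(r_chosen, r_rejected):
    """-log(sigmoid(r_chosen - r_rejected)), written as log(1 + exp(-diff))."""
    return math.log1p(math.exp(-(r_chosen - r_rejected)))


# Step 1: a human ranks three candidate responses, best first.
pairs = ranking_to_pairs(["A", "B", "C"])
print(pairs)

# Step 2: the loss is small when the reward model already scores the preferred
# response higher, and large when it scores the rejected one higher.
print(pairwise_loss(2.0, 0.0))
print(pairwise_loss(0.0, 2.0))
```

Minimizing this loss over many pairs pushes the reward model to agree with the human rankings, and the third step then tunes the language model to score well under that reward model.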

Despite its benefits, RLHF carries risks such as amplifying evaluator biases and producing outputs that may not be factually accurate. RLHF is more effective when a diverse group of feedback providers is involved, as this helps to address biases in model outputs. To improve RLHF, evaluators should therefore be selected to reflect a broad range of social, cultural, and ethical perspectives.

The integration of RLHF into LLMs represents a significant advancement in making these models more aligned with human values and expectations. This approach ensures that AI systems can provide more accurate and relevant responses, ultimately enhancing their usability and effectiveness in various applications.

Open-Source LLM Development

The evolution of large language models has seen significant contributions from open-source initiatives, democratizing access to advanced language technologies. Key benefits of open-source large language models include:

– Accelerating innovation through a community-driven approach

– Facilitating rapid advancements in technology

– Driving AI adoption across industries

– Allowing organizations to customize and deploy solutions without incurring high licensing costs

Open-source LLMs can enhance data security by allowing organizations to keep sensitive data on their private infrastructure. Ensuring security, managing misuse risks, and maintaining quality standards are primary challenges faced by open LLMs. However, the benefits of open-source frameworks in reducing barriers and accelerating innovation in AI development are significant. These models attract vibrant communities that evolve rapidly, contributing to ongoing technological advancements.

As the global LLM market continues to grow, the role of open-source AI models in shaping the future of AI should not be underestimated. They provide a platform for collaboration and innovation, driving the development of more efficient and versatile AI systems.

Prompt Engineering and Chain-of-Thought Reasoning

Prompt engineering is evolving to become an essential skill integrated into AI workflows. This practice involves crafting prompts that guide the AI to generate desired outputs more effectively. Chain-of-thought (CoT) prompting, which asks a model to write out intermediate reasoning steps before giving its final answer, continued to advance through 2024 and has changed the way AI systems process and generate responses. The full potential of AI systems will rely on continued improvements in prompt engineering and CoT prompting.
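
As a small illustration, a chain-of-thought prompt can be assembled with a helper like the one below. The `Q:`/`A:` layout and the function name are assumptions. The trailing cue "Let's think step by step." is a widely used zero-shot CoT phrase, and the optional worked examples turn the prompt into a few-shot one.

```python
def build_cot_prompt(question, examples=()):
    """Build a prompt that shows worked reasoning, then asks for step-by-step reasoning."""
    parts = []
    for q, reasoning, answer in examples:
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    # The final cue nudges the model to reason before answering.
    parts.append(f"Q: {question}\nA: Let's think step by step.")
    return "\n\n".join(parts)


# One hypothetical worked example, followed by the new question.
examples = [
    ("A shelf holds 3 boxes of 4 books. How many books?", "3 boxes times 4 books is 12.", "12"),
]
prompt = build_cot_prompt("A train has 5 cars with 20 seats each. How many seats?", examples)
print(prompt)
```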

Recent innovations such as the tree-of-thought approach enhance reasoning capabilities by searching over several candidate reasoning steps for better logical paths. Chain-of-thought decoding is evolving to generate more explicit reasoning paths, though these paths may not always be the most effective. Chain of Preference Optimization (CPO) fine-tunes LLMs to improve reasoning paths by leveraging tree-searching techniques. Fine-tuning LLMs with CPO can enhance performance in diverse tasks like question answering and fact verification without excessive inference costs.

These advancements are significant for various applications, from code generation to educational tools. By leveraging prompt engineering and CoT techniques, developers can achieve more accurate and contextually relevant model responses. As AI continues to evolve, the integration of generative AI tools and these methodologies will play a crucial role in enhancing the logical reasoning and overall performance of large language models, including the quality of AI-generated code.
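
The search idea behind tree-of-thought can be sketched as a small beam search in Python. This is a toy under stated assumptions: `expand` and `score` stand in for the LLM calls that would propose and evaluate thoughts, and the number puzzle (reach 10 starting from 1) is made up for illustration.

```python
def tree_of_thought_search(start, expand, score, beam_width=2, depth=3):
    """Breadth-first search over reasoning paths, keeping the best few at each level."""
    frontier = [[start]]
    for _ in range(depth):
        candidates = [path + [nxt] for path in frontier for nxt in expand(path[-1])]
        if not candidates:
            break
        # Keep only the highest-scoring partial paths (the "beam").
        candidates.sort(key=lambda path: score(path[-1]), reverse=True)
        frontier = candidates[:beam_width]
    return max(frontier, key=lambda path: score(path[-1]))


TARGET = 10


def expand(n):
    """Stand-in for an LLM proposing next thoughts: add one, double, or triple."""
    return [n + 1, n * 2, n * 3]


def score(n):
    """Stand-in for an LLM evaluating a thought: closer to the target is better."""
    return -abs(TARGET - n)


best_path = tree_of_thought_search(1, expand, score)
print(best_path)  # [1, 3, 9, 10]
```

Unlike a single chain of thought, the search keeps more than one partial path alive, so a weak early step does not have to doom the final answer. The cost is extra model calls at inference time, which is the overhead that methods such as CPO aim to avoid.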

Integration into Enterprise Workflows

Large language models are poised to become deeply integrated into enterprise workflows, transforming how businesses operate. In customer service, LLMs can automate support by providing dynamic, human-like responses in chatbots and help desks. Real-world examples show that LLMs can significantly reduce ticket handling time in IT service desks by automating responses, thereby improving efficiency and customer satisfaction. As LLM adoption in enterprises accelerates, these gains compound across departments from operations to finance.

Document processing is another area where LLMs can make a substantial impact. These models can extract and summarize data from various business documents, streamlining operations and reducing manual labor. Organizations can utilize open-source LLMs to avoid vendor lock-in, giving them the freedom to modify and redistribute the models as needed.

Moreover, the integration of LLMs into enterprise-grade solutions can enhance developer productivity by automating repetitive tasks and generating code snippets. Leveraging these capabilities allows businesses to significantly reduce resource consumption and improve workflow efficiency. As LLMs continue to evolve, their role in enterprise environments will become increasingly vital, driving innovation and productivity across various sectors.

Future Outlook for LLMs

The future outlook for large language models is incredibly promising, with innovations in automation and creative collaboration driving efficiency and sustainability. Key advancements include:

– The development of smaller, more efficient models

– The rise of domain-specific applications to improve accuracy and reduce costs

– Enhancements in techniques like few-shot and zero-shot learning, enabling faster deployment and adaptation to market changes.

Key future trends and priorities include:

– Efforts to optimize training techniques and improve hardware efficiency to promote sustainability.

– Increasing integration of LLMs into enterprise solutions for customer service and HR, enhancing productivity through task automation.

– The rise of deep search technologies transforming information retrieval by providing more contextual and reasoning-driven answers.

As LLMs evolve, they will need to incorporate ethical considerations, balancing ambition with caution to mitigate risks like misinformation and bias. The LLM market is projected to grow significantly, reaching over USD 105 billion in North America by 2030, driven by adoption in finance, healthcare, law, and tech. This growth underscores the transformative potential of LLMs and their role in shaping the future of AI.

Summary

The journey of large language models from their early beginnings to their current state is a testament to the relentless innovation in the field of artificial intelligence. Key trends such as efficiency and scalability, multimodal capabilities, domain-specific applications, and the rise of autonomous agents highlight the transformative potential of these models. As we look to the future, the integration of ethical considerations and the use of synthetic data for training will be crucial in ensuring the responsible development of LLMs.

In summary, the advancements in large language models are set to revolutionize various industries, driving efficiency, accuracy, and innovation. By staying informed about these trends, businesses and individuals can harness the power of LLMs to stay ahead in an ever-evolving technological landscape. The future of AI is bright, and large language models will undoubtedly play a central role in shaping it.
